Abstract

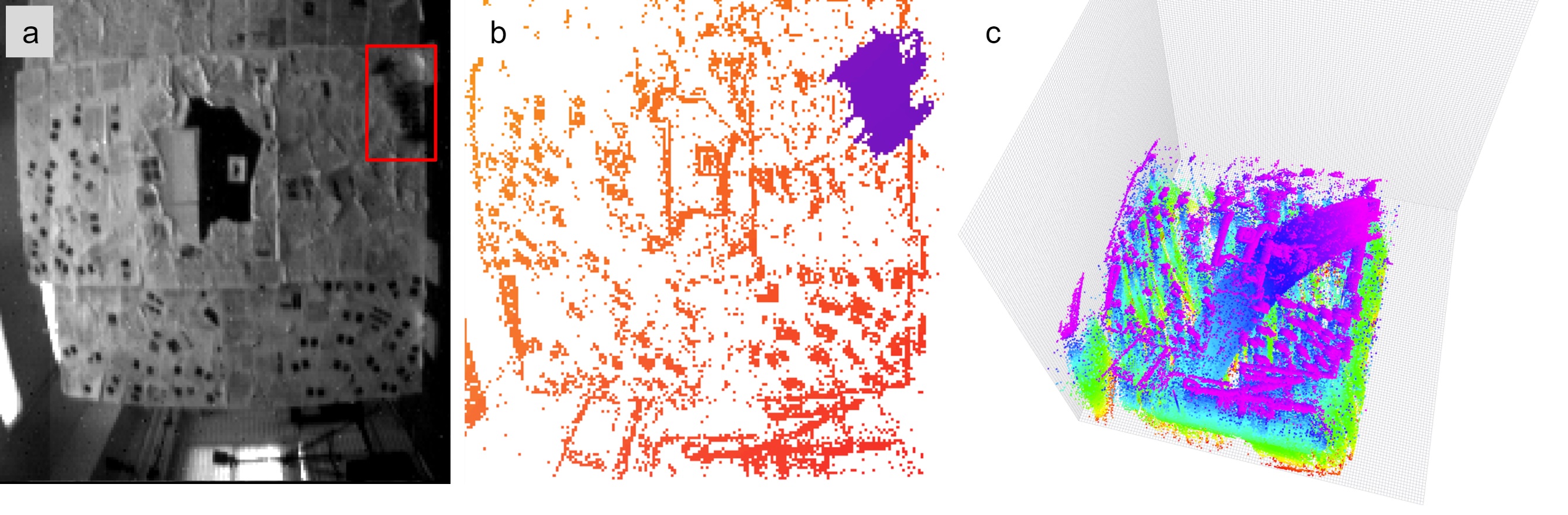

Fig. 1. Different motion representations acquired from the DAVIS sensor: (a) Grayscale image from a frame-based camera (red bounding box denotes a moving object). (b) Motion-compensated projected event cloud. Color denotes inconsistency in motion. (c) The 3D representation of the event cloud in (x, y, t) coordinate space. Color represents the timestamp, with [red - blue] corresponding to [0.0 - 0.5] seconds. The separately moving object (a quadrotor) is clearly visible as a trail of events passing through the entire 3D event cloud.

Event-based vision sensors, such as the Dynamic Vision Sensor (DVS), are ideally suited for real-time motion analysis. Their unique properties include high temporal resolution, superior sensitivity to light, and low latency. These properties make it possible to estimate motion very reliably even in the most challenging scenarios, but they come at a price: modern event-based vision sensors have extremely low resolution and produce a lot of noise. Moreover, the asynchronous nature of the event stream calls for novel algorithms. This paper presents a new, efficient approach to object tracking with asynchronous cameras. We present a novel event stream representation that enables us to use information about the dynamic (temporal) component of the event stream at every moment in time, not only the spatial component. This is done by approximating the 3D geometry of the event stream with a parametric model. As a result, the algorithm can produce the motion-compensated event stream (effectively approximating egomotion) in extremely low-light and noisy conditions, without using any external sensors and without any form of feature tracking or explicit optical flow computation. We demonstrate our framework on the task of independent motion detection and tracking, where we use the temporal model inconsistencies to locate differently moving objects in challenging situations of very fast motion.
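The abstract only outlines the idea: fit a parametric motion model to the (x, y, t) event cloud, warp the events to compensate for that motion, and treat events the model fails to explain as independently moving. The following is a minimal sketch of that idea under strong simplifying assumptions, not the authors' method. It uses a single global 2D translational velocity (vx, vy) in place of the paper's parametric model, a brute-force grid search, and the number of occupied pixels as the alignment cost. It also substitutes a low per-pixel event count after compensation for the paper's temporal inconsistency measure. All names (`warp`, `occupied_pixels`, `fit_global_motion`, `flag_inconsistent`, `min_count`) are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

# Hypothetical event format: (x, y, t) with pixel coordinates and timestamp in seconds.
Event = Tuple[float, float, float]


def warp(events: Iterable[Event], vx: float, vy: float, t0: float = 0.0) -> List[Tuple[int, int]]:
    """Move every event back to reference time t0 under a constant image
    velocity (vx, vy) in pixels/second, then round to the nearest pixel."""
    return [
        (math.floor(x - vx * (t - t0) + 0.5), math.floor(y - vy * (t - t0) + 0.5))
        for x, y, t in events
    ]


def occupied_pixels(events: Sequence[Event], vx: float, vy: float, t0: float = 0.0) -> int:
    """Alignment cost: number of distinct pixels hit by the warped events.
    A good motion model collapses each edge's trail, so fewer pixels means a better fit."""
    return len(set(warp(events, vx, vy, t0)))


def fit_global_motion(events: Sequence[Event], candidates_vx, candidates_vy, t0: float = 0.0):
    """Grid search for the single velocity that best compensates the whole cloud.
    Because most events belong to the background, this approximates egomotion."""
    return min(
        ((vx, vy) for vx in candidates_vx for vy in candidates_vy),
        key=lambda v: occupied_pixels(events, v[0], v[1], t0),
    )


def flag_inconsistent(events: Sequence[Event], vx: float, vy: float,
                      t0: float = 0.0, min_count: int = 3) -> List[bool]:
    """Flag events that land in sparsely populated pixels after compensation,
    i.e. events whose trail was NOT collapsed by the dominant motion."""
    pixels = warp(events, vx, vy, t0)
    counts = Counter(pixels)
    return [counts[p] < min_count for p in pixels]


if __name__ == "__main__":
    # Synthetic scene: a vertical background edge moving right at 20 px/s and a
    # small object moving left at 40 px/s, sampled every 50 ms over 0.5 s.
    background = [(10 + k, y, k / 20) for k in range(10) for y in range(20)]
    obj = [(40 - 2 * k, 25, k / 20) for k in range(10)]
    events = background + obj
    vx, vy = fit_global_motion(events, range(-50, 55, 5), range(-10, 15, 5))
    flags = flag_inconsistent(events, vx, vy)
    print((vx, vy), sum(flags))  # prints: (20, 0) 10
```

In this toy scene the background edge collapses onto one column when warped with (20, 0), while the object's 10 events stay smeared into isolated pixels and are flagged. A practical version would likely use a richer motion model, a continuous optimizer instead of a grid, and a noise-robust inconsistency score.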

Paper

Anton Mitrokhin, Cornelia Fermüller, Chethan Parameshwara, Yiannis Aloimonos.