Abstract

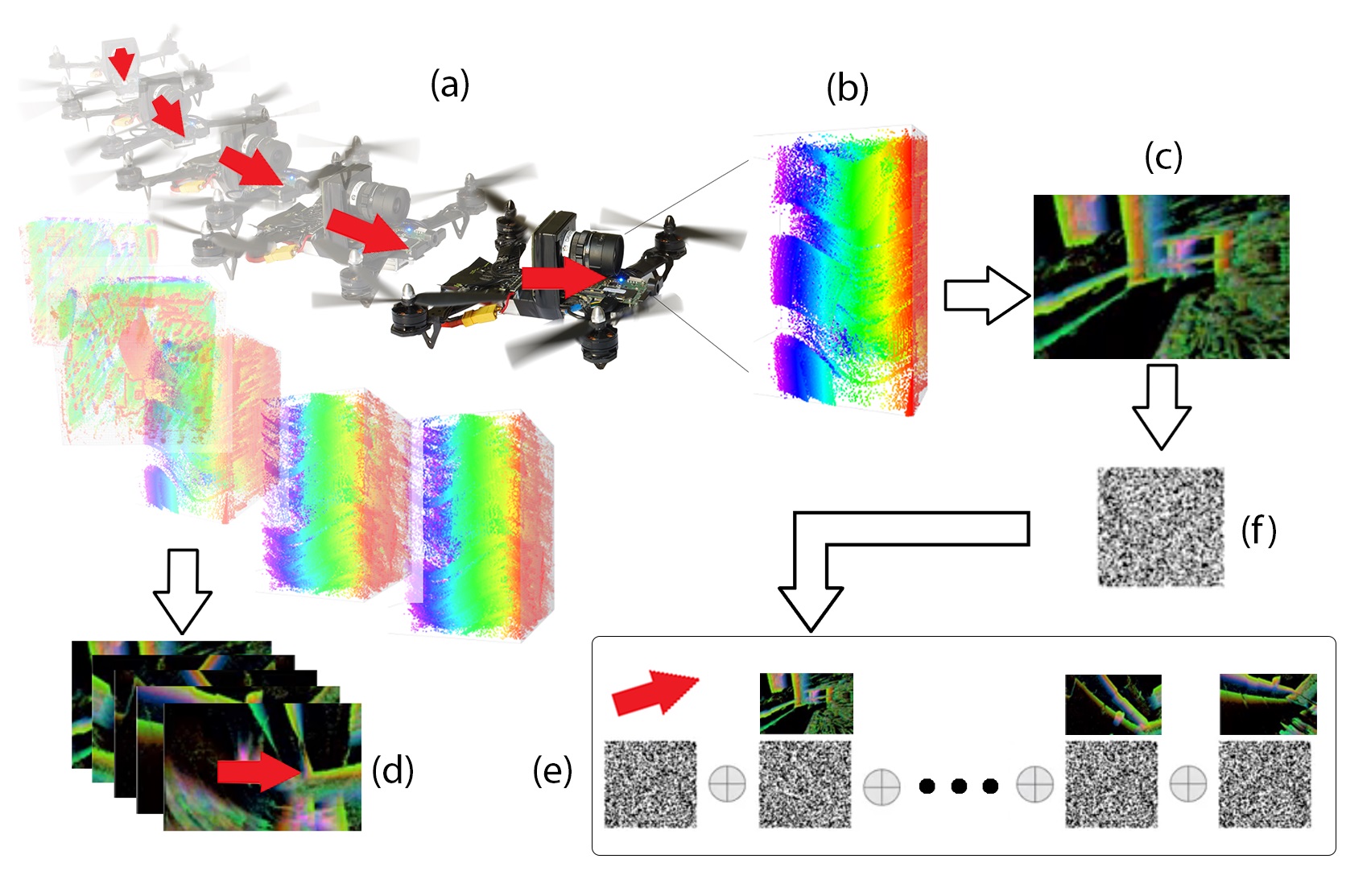

Fig. 1. (a) A drone flies in a particular pattern, generating events (rapid changes in pixel intensity) on the DVS camera and velocity vectors indicating its direction of travel. (b) The flight creates events, visualized as a cloud of 2D pixels over time (darker colors are older events), with time as the third dimension. (c) Events are used to generate special images, called time slices, that show motion over a period of time as a blurring effect. (d) Time slices are paired with the drone's direction of travel to produce data on how to navigate an environment. (e) Each time slice recorded at a particular velocity is turned into a long binary vector (shown here as a square black-and-white image) that encodes the motion information. These vectors are stacked on top of each other via a special operation, along with a unique long binary vector identifying the direction, into a single long binary vector encoding. This encoding forms a memory of every time the drone moved in that direction. (f) Given a newly observed time slice, the drone can predict how to move by recalling the velocity vector. This is done by finding the long binary vector that contains the most similar instance of a time slice, where similarity is measured by Hamming distance.

The hallmark of modern robotics is the ability to directly fuse the platform's perception with its motoric ability - a concept often referred to as active perception. Nevertheless, we find that action and perception are often kept in separate spaces, a consequence of traditional vision being frame-based and existing only in the moment, whereas motion is a continuous entity. This gap is bridged by the dynamic vision sensor (DVS), a neuromorphic camera that can see motion. We propose a method of encoding actions and perceptions together into a single space that is meaningful, semantically informed, and consistent by using hyperdimensional binary vectors (HBVs). We used a DVS for visual perception and showed that the visual component can be bound with the system velocity to enable dynamic world perception, which creates an opportunity for real-time navigation and obstacle avoidance. Actions performed by an agent are directly bound to the perceptions it experiences to form its own memory. Furthermore, because HBVs can encode entire histories of actions and perceptions - from atomic to arbitrary sequences - as constant-sized vectors, autoassociative memory was combined with deep learning paradigms for controls. We demonstrate these properties on a quadcopter drone ego-motion inference task and the MVSEC (multivehicle stereo event camera) dataset.
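The memory and recall steps described in the figure caption (panels e and f) can be illustrated with a minimal sketch. It is not the authors' implementation: the vector length `DIM`, the per-bit majority vote used as the "stacking" operation, and the use of random vectors as stand-ins for encoded time slices are all assumptions made for illustration. All function names are hypothetical.

```python
import numpy as np

DIM = 8192  # assumed vector length; the page does not state one
rng = np.random.default_rng(0)

def random_hbv():
    """Draw a random binary vector (stand-in for an encoded time slice or a direction ID)."""
    return rng.integers(0, 2, size=DIM, dtype=np.uint8)

def bundle(vectors):
    """Stack vectors by per-bit majority vote (assumed operation; ties go to 1)."""
    stacked = np.stack(vectors)
    return (2 * stacked.sum(axis=0) >= len(vectors)).astype(np.uint8)

def hamming(a, b):
    """Number of bit positions where the two vectors differ."""
    return int(np.count_nonzero(a != b))

# Build one memory vector per direction: four time-slice vectors plus the
# direction's ID vector, bundled into a single constant-sized vector.
directions = ["left", "right", "up", "down"]
slices = {d: [random_hbv() for _ in range(4)] for d in directions}
ids = {d: random_hbv() for d in directions}
memory = {d: bundle(slices[d] + [ids[d]]) for d in directions}

def recall(query):
    """Return the direction whose memory vector is closest in Hamming distance."""
    return min(memory, key=lambda d: hamming(memory[d], query))

# A new observation: a stored "left" time slice with 10% of its bits flipped.
query = slices["left"][0].copy()
flip = rng.choice(DIM, size=DIM // 10, replace=False)
query[flip] ^= 1

predicted = recall(query)
print(predicted, {d: hamming(memory[d], query) for d in directions})
```

In this sketch the noisy query stays much closer to the memory that contains its original than to the unrelated memories, which sit near `DIM / 2` bits away. That gap is what makes nearest-neighbor recall by Hamming distance workable even though each memory has a fixed size.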

Paper

Anton Mitrokhin*, Peter Sutor*, Cornelia Fermüller, Yiannis Aloimonos.

* Equal Contribution
