Robots are active agents that operate in dynamic scenarios with noisy sensors. Predictions based on these noisy sensor measurements are often erroneous and can be unreliable. To mitigate this, roboticists have used fusion methods that combine multiple observations. Lately, neural networks have dominated the accuracy charts for perception-driven predictions used in robotic decision-making, but they often lack uncertainty metrics associated with their predictions. In this paper, we present a mathematical formulation to obtain the heteroscedastic aleatoric uncertainty of any arbitrary distribution without prior knowledge about the data. The approach makes no prior assumptions about the prediction labels and is agnostic to network architecture. Furthermore, our class of networks, Ajna, adds minimal computation and requires only a small change to the loss function used during training to obtain the uncertainty of predictions, enabling real-time operation even on resource-constrained robots.
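
To illustrate what "a small change to the loss function" can look like, the sketch below shows a commonly used heteroscedastic loss, the Gaussian negative log-likelihood with a per-sample predicted log-variance. This is not the Ajna formulation, which the abstract does not state and which, unlike this sketch, makes no assumption about the distribution. The function names and the Gaussian assumption are ours, chosen only for illustration.

```python
import math


def plain_l2_loss(preds, targets):
    """Ordinary mean squared error: no uncertainty output."""
    return sum((y - y_hat) ** 2 for y_hat, y in zip(preds, targets)) / len(preds)


def heteroscedastic_gaussian_loss(preds, log_vars, targets):
    """Mean Gaussian negative log-likelihood (up to a constant).

    Assumption (illustrative only): the network emits, for every sample,
    a prediction y_hat and a log-variance s = log(sigma^2). The squared
    error is down-weighted by exp(-s), and the 0.5 * s term penalizes
    claiming a large variance everywhere.
    """
    total = 0.0
    for y_hat, s, y in zip(preds, log_vars, targets):
        total += 0.5 * math.exp(-s) * (y - y_hat) ** 2 + 0.5 * s
    return total / len(preds)


if __name__ == "__main__":
    preds = [1.0, 2.0]
    targets = [1.5, 2.0]
    # With zero log-variance the loss reduces to half the L2 loss.
    print(plain_l2_loss(preds, targets))
    print(heteroscedastic_gaussian_loss(preds, [0.0, 0.0], targets))
```

In this sketch, the only differences from ordinary L2 training are one extra output per prediction and the two extra terms in the loss, so the added cost at inference time is small.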

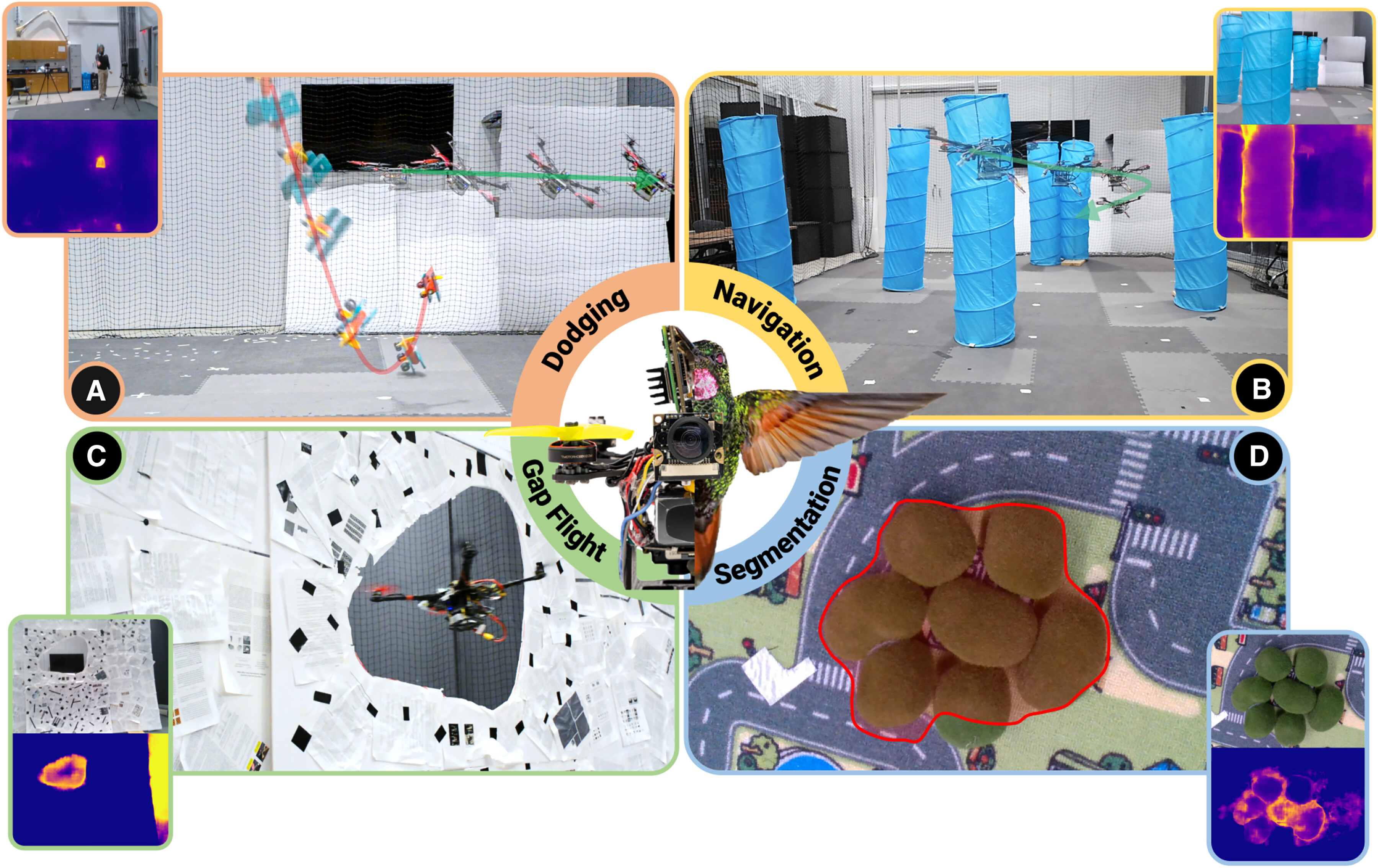

We call our class of networks Ajna after the third eye of Lord Shiva from Hindu mythology, which refers to the eye of wisdom/consciousness/intuition, since our networks can 'see' (predict) where they might not work well. Additionally, we study the informational cues present in the uncertainties of predicted values and their utility in the unification of common robotics problems. In particular, we present an approach to dodge dynamic obstacles, navigate through a cluttered scene, fly through unknown gaps, and segment an object pile, without computing depth but rather using the uncertainties of optical flow obtained from a monocular camera with onboard sensing and computation. We successfully evaluate and demonstrate the proposed Ajna network on the four aforementioned common robotics and computer vision tasks and show comparable results to methods directly utilizing depth. Our work presents a generalized deep uncertainty method and demonstrates its utility in robotics applications.

Figure: Unification of common robotics problems using our generalized uncertainty formulation, demonstrating: (a) dodging dynamic objects, (b) flying through unstructured environments, (c) flying through unknown gaps, and (d) segmentation.