Abstract

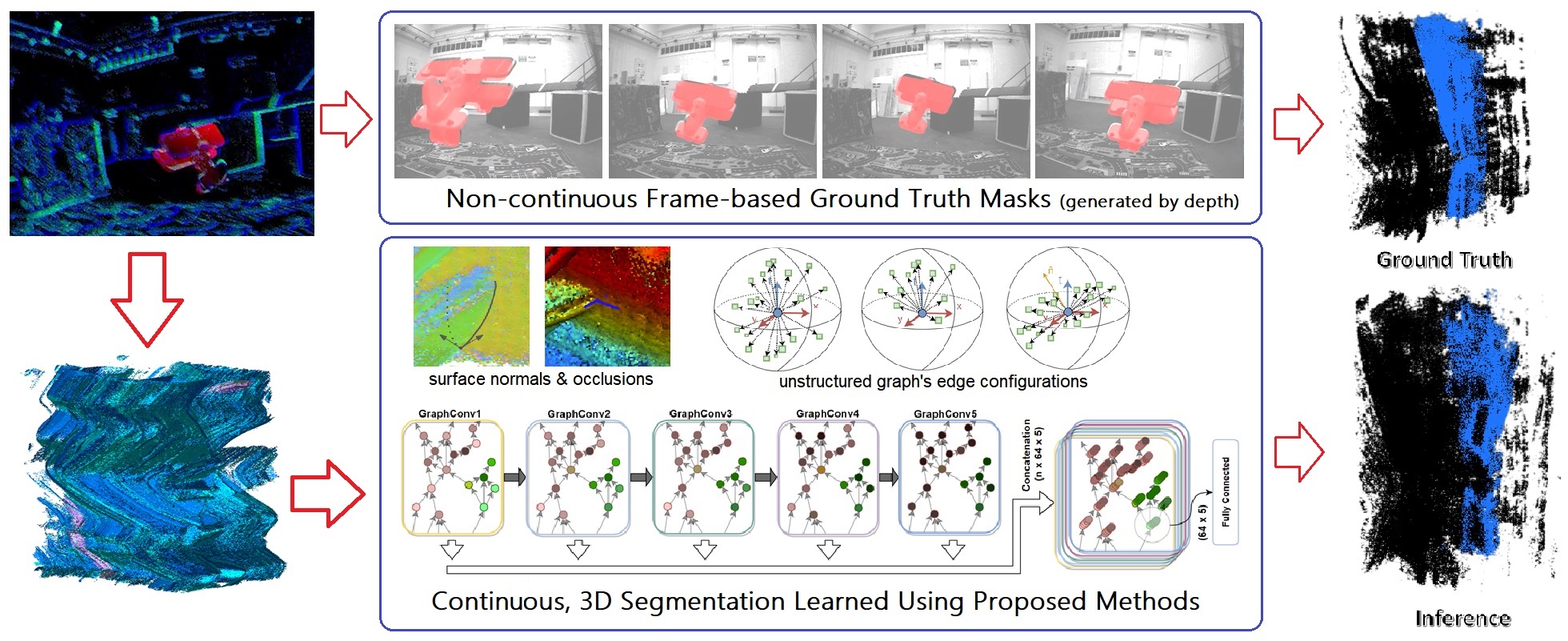

Fig. 1. An overview of our general pipeline. The top portion shows how we used the Vicon motion capture system to generate ground truth object segmentation masks and render them into 3D. The bottom portion shows how the event-based camera recording is first converted into a 3D point cloud, then built into an unstructured graph with different edge configurations and estimated surface normals, allowing our Graph Convolutional Network to make efficient and accurate inferences.

Event-based cameras are designed for perceiving scene motion: their high temporal resolution and spatial data sparsity convert the scene into a volume of boundary trajectories, making it possible to track and analyze how the scene evolves in time. Analyzing this data is computationally expensive, and there is a substantial lack of theory on dense-in-time object motion to guide the development of new algorithms. Hence, many works resort to the simple solution of discretizing the event stream and converting it into classical pixel maps, which allows conventional image processing methods to be applied.
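
To make the discretization approach concrete, the sketch below accumulates one time slice of events into a per-pixel count map. It is a minimal illustration only, assuming events are stored as rows of (x, y, t); the function name `events_to_count_image` and the array layout are hypothetical and not taken from any particular codebase.

```python
import numpy as np


def events_to_count_image(events, height, width, t_start, t_end):
    """Accumulate events with t in [t_start, t_end) into a per-pixel count map.

    `events` is assumed to be an (N, 3) array of (x, y, t) rows.
    """
    x = events[:, 0].astype(np.int64)
    y = events[:, 1].astype(np.int64)
    t = events[:, 2]

    # Keep only the events that fall inside the requested time slice.
    in_slice = (t >= t_start) & (t < t_end)

    image = np.zeros((height, width), dtype=np.int32)
    # np.add.at handles repeated pixel coordinates correctly (unbuffered add).
    np.add.at(image, (y[in_slice], x[in_slice]), 1)
    return image


# Toy event stream: two events at pixel (x=1, y=0), one at (2, 1), one outside the slice.
events = np.array([
    [1, 0, 0.001],
    [1, 0, 0.004],
    [2, 1, 0.012],
    [0, 2, 0.030],
])
count_image = events_to_count_image(events, height=3, width=4, t_start=0.0, t_end=0.025)
```

Note that the two events at the same pixel collapse into a single count, so their individual timestamps are lost once the slice is rendered as an image.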

In this work we present a Graph Convolutional neural network for the task of scene motion segmentation with a moving camera. We convert the event stream into a 3D graph in (x, y, t) space and keep per-event temporal information. The difficulty of the task stems from the fact that, unlike in metric space, the shape of an object in (x, y, t) space depends on its motion and is not the same across the dataset. We discuss properties of the event data with respect to this 3D recognition problem, and show that our Graph Convolutional architecture is superior to PointNet++. We evaluate our method on EV-IMO, the state-of-the-art event-based motion segmentation dataset, and compare it to a frame-based method proposed by the dataset's authors. Our ablation studies show that increasing the event slice width improves accuracy, and examine how subsampling and edge configurations affect network performance.
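
As a rough illustration of turning an event stream into a graph in (x, y, t) space, the sketch below connects each event to its nearest neighbors while keeping every event's timestamp as a node coordinate. This is a minimal sketch under stated assumptions, not the construction used in this work: the k-nearest-neighbor rule, the brute-force distance computation, and the names `build_event_graph`, `k`, and `time_scale` are all hypothetical, and surface normal estimation is omitted.

```python
import numpy as np


def build_event_graph(events, k=2, time_scale=1.0):
    """Build a k-nearest-neighbor graph over events in (x, y, t) space.

    `events` is assumed to be an (N, 3) array of (x, y, t) rows, with N > k.
    `time_scale` (an assumed parameter) converts time into units comparable
    to pixels so that distances in (x, y, t) are meaningful.
    """
    points = events[:, :3].astype(np.float64).copy()
    points[:, 2] *= time_scale

    # Brute-force pairwise squared distances; fine for a small sketch only.
    diff = points[:, None, :] - points[None, :, :]
    dist2 = (diff ** 2).sum(axis=-1)
    np.fill_diagonal(dist2, np.inf)  # exclude self-loops

    # Indices of the k closest events for every node.
    neighbors = np.argsort(dist2, axis=1)[:, :k]

    # Edge list of shape (2, N * k): row 0 holds sources, row 1 holds targets.
    sources = np.repeat(np.arange(len(points)), k)
    edge_index = np.stack([sources, neighbors.reshape(-1)])
    return points, edge_index


# Two clusters of events, well separated in both space and time.
events = np.array([
    [0, 0, 0.00],
    [1, 0, 0.01],
    [0, 1, 0.02],
    [10, 10, 0.50],
    [11, 10, 0.51],
])
points, edge_index = build_event_graph(events, k=2, time_scale=100.0)
```

Each node keeps its own (scaled) timestamp, so no temporal information is discarded by binning. The choice of `time_scale` changes which events count as neighbors, which is one reason the apparent shape of an object in this space depends on its motion.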

Paper


Anton Mitrokhin*, Zhiyuan Hua*, Cornelia Fermüller, Yiannis Aloimonos.

* Equal Contribution
